Revisions to estimates reported in earlier months are also reported, but typically get much less attention. There are normally two rounds of revisions to each month’s data, reported one and two months after the initial report as more complete data become available.

It seemed to me that there was a tendency for these revisions to be “upward”, that is, for the initially-released numbers to have been too low. So I tracked down the Employment Situation reports between February 2005 and January 2006 and collected the numbers.

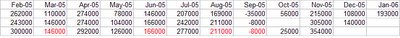

The numbers are below. Initial reports are the top numbers, first revisions the middle, and second (final) revisions at bottom. Numbers in red were not given in the report two months after they were initially reported; my assumption is that they were revised only once, and I have carried them down for consistency of format.

And yes, from February through December (counting December, even though only its first revision has come out), upward revisions were the norm, occurring in ten of eleven months; October was the exception. The mean change between the initial release and the most recent reported revision was just under 40,000, including October's downward revision.

In case you’re curious about how likely that is, I’ll save you some trouble; the “pure chance” probability of getting more than 9 revisions out of 11 in the same direction (assuming no actual tendency for them to be in a particular direction) is about 0.01. Bear in mind, however, that my choice of these months was not random – I had a sense that the revisions were disproportionately upward, which was why I pulled a year’s worth of numbers, and the months were also consecutive rather than truly randomly selected.
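For anyone who wants to check that figure, here is a minimal sketch. It assumes each month's revision is an independent coin flip with equal odds of going up or down, which is the "no actual tendency" case described above. The function names are mine, chosen for illustration only. It counts 10 or 11 revisions out of 11 landing in the same direction, in either direction.

```python
from math import comb


def upper_tail(n: int, k: int) -> float:
    """P(at least k successes in n independent fair coin flips)."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n


def same_direction_tail(n: int, k: int) -> float:
    """P(at least k of n flips land on the same side, whichever side)."""
    if 2 * k <= n:
        # Assumption of this sketch: the two tails must not overlap.
        raise ValueError("k must exceed n / 2 so the two tails do not overlap")
    return 2 * upper_tail(n, k)


months = 11
threshold = 10  # "more than 9" means 10 or 11

print(f"{upper_tail(months, threshold):.4f}")           # 0.0059 (upward only)
print(f"{same_direction_tail(months, threshold):.4f}")  # 0.0117 (either direction)
```

The "either direction" figure, 24/2048, is the one that rounds to about 0.01. If you only count upward runs, the probability is half that.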

Still, the data suggest that the BLS establishment survey systematically undercounted jobs created in its initial reports over the last year. I have no idea why this would be the case. I doubt it is politically motivated, but it may, of course, have political ramifications.

14 comments:

"I doubt it is politically motivated"

Why? That's a serious question. When I was researching the Barrett Report I came to understand that the National Archives was "slow" in posting the actual documents concerning the Clinton scandals. They couldn't even quite bring themselves to post the Starr Report. As I returned to the site I noticed that archived information concerning Justice Roberts's and Justice Alito's work was being dredged out at a rather astonishing rate (for an archive).

I wonder if it would be inaccurate to say that Federal civilian employees are Dem adherents to a greater extent than members of the military are Rep adherents?

While I understand that the revisions may be due to an improper entry or collection method being used, I would certainly not rule out political motivation. A review of the same months in '96 for similar discrepancies might be helpful.

I thoroughly enjoy your data presentations and hope that you do more of them.

RogerA,

How would you test a hypothesis of malice or the lack thereof? I agree that aggregating a large amount of data is quite a task - but it's a very repetitive task that should improve (in terms of accuracy) over time. The BLS has been doing it for a very lonnng time.

The utility of the information provided is greatly diminished by inaccuracy, and the BLS initial numbers are (as you note) given great play in the news. Sometimes the numbers affect the market, so inaccuracy carries a price.

DT,

Wasn't that a case of the MSM determinedly not looking at numbers that were released? If I remember correctly, the first and second quarter reports for '92 showed that the 'hiccup' recession had ended but the MSM never seemed to get around to reporting it.

It's about the same today - we're in the middle of a long smooth expansion and you would never know it from reading the MSM.

David,

What you say is true.

But a major factor in that election--let's not forget it--was that Bush I was sick of being President and didn't really want the job anymore. His campaign was purely perfunctory. His apathy or distaste showed in every speech he gave, in every campaign stop he made. This was probably the most major factor and caused people to look elsewhere for something new and interesting. Clinton was certainly that.

I suppose you would have to check a similar series from Clinton's time in office to claim bias, though one does wonder where the extra jobs come from every month.

Well to be honest the only way we could have real evidence of malice or political manipulation would be to check out the numbers when Clinton was in office.

My ex-brother-in-law has worked for the Justice Department since 1971 and he is a Republican. He has outlasted a lot of presidents and he is diligent in regards to his job no matter who is in office.

It is not really fair to assume that everybody in the Labor Dept. is part of some conspiracy. This whole thing might have another explanation.

I really do not know.

Chuck - If I remember correctly the standard BLS reason given is "late receipt" of reports. The problem with that is that a data series like that can be reduced to a set of probabilities that would allow for interpolation with a high probability of being very accurate. There may be a bureaucratic "rule" preventing interpolation but I don't believe that there is a "real" rule preventing it.

The series is just too long for it not to be susceptible to effective analysis.

Terrye,

I agree - that's why an examination of '96 would be interesting. I don't know where to find the revision numbers or I would do it myself. The National Archives deal is real though - and easy to verify.

Extrapolate - there's an unknown involved. Although that's probably not precise, either - "accurately estimate" might be better.

Rick, there's a ... philosophical, I guess is the word here ... problem with reporting an extrapolated or predicted number: these are supposed to be real numbers. That's why they report them as "preliminary" -- they know the final numbers will be different. You'll also hear about "seasonally-adjusted" numbers, and those are an extrapolation.

The real issue is that the legacy media tends to announce the preliminary numbers as if they were final, and make less of the final numbers.

One way for an unbiased press to handle this would be to note what Morgan has just explained in one phrase. For example:

"Today, the BLS released its preliminary jobs report for the month of _____________. The increase in payroll jobs, according to the report was ___________ jobs. Over the past year, preliminary figures have averaged about __ percent of the final numbers. The projected final report based on that average is ____________ jobs."

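The arithmetic behind that template can be sketched as follows. This is only an illustration of the suggestion above, not anything the BLS or the press actually does. The function name and the example figures (150,000 jobs, 80 percent) are made up to show the calculation. It assumes the preliminary number is, on average, a fixed percentage of the final one.

```python
def projected_final(preliminary_jobs: float, avg_prelim_pct_of_final: float) -> float:
    """Scale a preliminary figure up (or down) by the historical
    preliminary-to-final percentage to get a projected final figure."""
    if avg_prelim_pct_of_final <= 0:
        # A ratio-based projection is meaningless for zero or negative percentages.
        raise ValueError("percentage must be positive")
    return preliminary_jobs * 100 / avg_prelim_pct_of_final


# Hypothetical inputs, not real BLS numbers.
example = projected_final(150_000, 80)
print(example)  # 187500.0
```

A ratio like this gets unstable in months where the final number is near zero or negative, so an average additive adjustment might be a safer basis for the projection in those cases.
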
I want to make clear that when I titled the post "...downwardly biased...", I was using biased in the statistical sense, not the political one.

The BLS has reports archived and available on line at least back to December of 1995:

www.bls.census.gov/cps/pub/empsit_1295

So it is at least theoretically possible to look back a decade.

The (p) numbers, if not getting a full body massage, are certainly getting a good rubdown:

The sample-based estimates from the establishment survey are adjusted once a year (on a lagged basis) to universe counts of payroll employment obtained from administrative records of the unemployment insurance program.

The difference between the March sample-based employment estimates and the March universe counts is known as a benchmark revision, and serves as a rough proxy for total survey error.

It may just be that last year's benchmark revision was off. I still haven't found a data set with the monthly adjustments in table form.

Morgan:

Using the table data going back to '80 there is a funny anomaly between even and odd numbered months. Odd numbered months average 213K jobs added (over the 26 years) while even numbered months average 18K. Now that's a statistical bias. Maybe.

Rick,

I also couldn't find a dataset that included the revisions.

If you compare the numbers I presented with the numbers in the dataset you pulled, you'll see that they have often undergone fairly major revisions based on the annual (?) revisions - I don't know how they accomplish those.

The even-odd month thing is, on its face, astonishing. I'll have to take a look at it.

Hmmm. This may help to explain things (from Rick's link):

Another major source of nonsampling error in the establishment survey is the inability to capture, on a timely basis, employment generated by new firms. To correct for this systematic underestimation of employment growth, an estimation procedure with two components is used to account for business births. The first component uses business deaths to impute employment for business births. This is incorporated into the sample-based link relative estimate procedure by simply not reflecting sample units going out of business, but imputing to them the same trend as the other firms in the sample. The second component is an ARIMA time series model designed to estimate the residual net birth/death employment not accounted for by the imputation. The historical time series used to create and test the ARIMA model was derived from the unemployment insurance universe micro-level database, and reflects the actual residual net of births and deaths over the past five years.

So maybe new firms are being generated more quickly now than the model predicts based on the past five years of data, causing the estimated number employed by them to be too low, and hence subject to upward revision as these "new firms" become established firms.
